Towards Explainable Proactive Robot Interactions for Groups of People in Unstructured Environments

Abstract

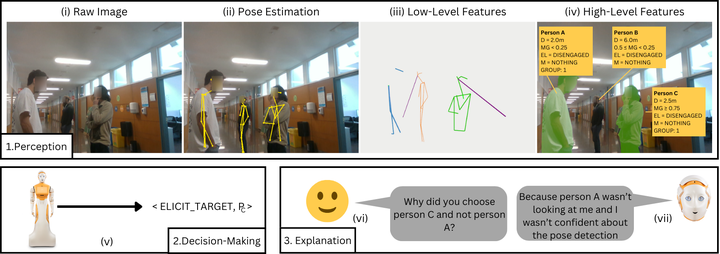

To operate in unstructured public spaces, social robots must gauge complex factors such as human-robot engagement and inter-person social groups, and decide how and with whom to interact. Such robots should also be able to explain their decisions after the fact, to improve accountability and confidence in their behavior. To address this, we present a two-layered proactive system that extracts high-level social features from low-level perceptions and uses these features to make high-level decisions about initiating and maintaining human-robot interactions. With this system outlined, the primary focus of this work is a novel method for generating counterfactual explanations in response to a variety of contrastive queries. We provide an early proof of concept illustrating how these explanations can be generated by leveraging the two-layer system.
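To make the two-layer idea concrete, the following is a minimal illustrative sketch, not the system described in this work. It assumes three boolean social features, a hand-written decision rule, and a brute-force search over feature flips to answer a contrastive query of the form "why this action rather than that one?". All names, thresholds, and actions (`SocialFeatures`, `extract_features`, `decide`, `counterfactual`, the 3.0 m and 1.5 m ranges) are hypothetical.

```python
from dataclasses import dataclass, replace
from itertools import combinations


@dataclass(frozen=True)
class SocialFeatures:
    engaged: bool   # person appears engaged with the robot (assumed feature)
    in_group: bool  # person belongs to a detected social group (assumed feature)
    close: bool     # person is within interaction range (assumed feature)


def extract_features(distance_m, gaze_at_robot, neighbors_nearby):
    """Layer 1 (hypothetical): low-level perceptions -> high-level social features."""
    return SocialFeatures(
        engaged=gaze_at_robot and distance_m < 3.0,  # illustrative threshold
        in_group=neighbors_nearby > 0,
        close=distance_m < 1.5,                      # illustrative threshold
    )


def decide(f):
    """Layer 2 (hypothetical): high-level features -> interaction decision."""
    if f.engaged and f.close:
        return "greet_group" if f.in_group else "greet_person"
    if f.engaged:
        return "approach"
    return "ignore"


def counterfactual(f, foil):
    """Return a smallest set of feature changes under which decide() yields `foil`.

    Returns {} if `foil` is already the decision, or None if no combination
    of feature flips produces it.
    """
    if decide(f) == foil:
        return {}
    names = ["engaged", "in_group", "close"]
    # Try single flips first, then pairs, then all three.
    for k in range(1, len(names) + 1):
        for subset in combinations(names, k):
            changed = replace(f, **{n: not getattr(f, n) for n in subset})
            if decide(changed) == foil:
                return {n: getattr(changed, n) for n in subset}
    return None


# A distant person in a group who is not looking at the robot.
features = extract_features(distance_m=4.0, gaze_at_robot=False, neighbors_nearby=2)
decision = decide(features)
why_not_approach = counterfactual(features, "approach")
why_not_greet_group = counterfactual(features, "greet_group")
```

In this toy setting the explanation is phrased over the high-level features rather than raw perceptions, which is the role the intermediate layer plays in the sketch: "the robot would have approached if the person had been engaged" is easier to communicate than a statement about distances and gaze angles.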