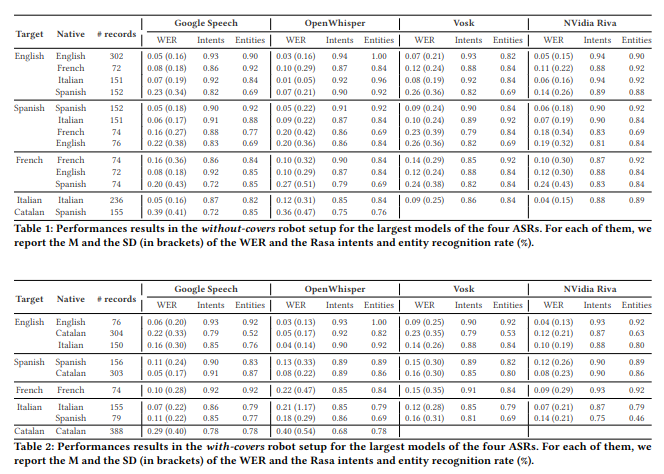

Dataset and Evaluation of Automatic Speech Recognition for Multi-lingual Intent Recognition on Social Robots

Abstract

While Automatic Speech Recognition (ASR) systems excel in controlled environments, robot-specific setups pose additional challenges due to unique microphone requirements and added noise sources. In this paper, we create a dataset of common robot instructions in five European languages, and we systematically evaluate current state-of-the-art ASR systems (Vosk, OpenWhisper, Google Speech and NVIDIA Riva). Besides standard metrics, we also examine two critical downstream tasks for human-robot verbal interaction: intent recognition rate and entity extraction, using the open-source Rasa framework. Overall, we found that open-source solutions such as Vosk perform competitively with closed-source solutions while running on the edge, on a low compute budget (CPU only).