User Interactions and Negative Examples to Improve the Learning of Semantic Rules in a Cognitive Exercise Scenario

Abstract

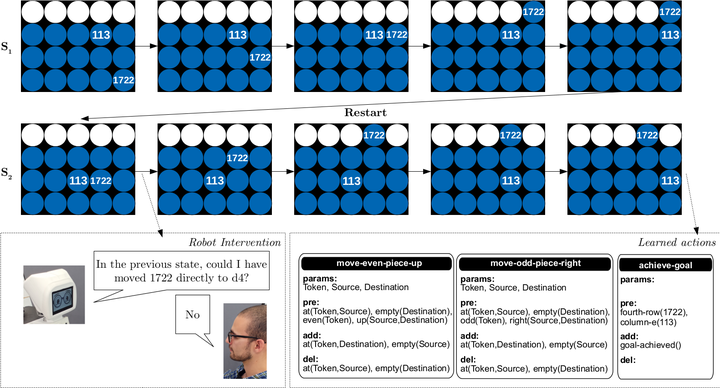

Enabling a robot to perform new tasks is a complex endeavor, usually beyond the reach of non-technical users. For this reason, research efforts that aim to empower end-users to teach robots new abilities through intuitive modes of interaction are valuable. In this article, we present INtuitive PROgramming 2 (INPRO2), a learning framework that infers planning actions from demonstrations given by a human teacher. INPRO2 operates in an assistive scenario in which a healthcare professional (a therapist or caregiver) teaches the robot new cognitive exercises that can later be administered to patients with cognitive impairment. INPRO2 features significant improvements over previous work, namely: (1) exploitation of negative examples; (2) proactive interaction with the teacher to ask questions about the legality of certain movements; and (3) learning goals in addition to legal actions. Through simulations, we evaluate the performance of different proactive strategies for gathering negative examples. Real-world experiments with human teachers and a TIAGo robot are also presented to qualitatively illustrate INPRO2.